TEXT PROCESSING AND ITS RELEVANCE TO SURVEY RESEARCH

One of the important tasks in a survey research study is processing the texts collected, whether they are short, repetitive answers or long opinion texts. In market research companies this task is typically performed by one or several people whose role in the organization is called the CODER. The task of the coder (or classifier) is to transform the various texts captured into closed categories, as if they were answers to single-choice questions. Although the task seems simple, the coder needs the intellectual and heuristic capacity, as well as the intuition, to classify the various responses into closed categories that encompass and reflect the opinions of the respondents.

HUMAN PROCEDURE FOR CODING SURVEY OPINION TEXTS

When classifying the texts of a survey, the coder must first read quickly and diagonally (that is, skim) through all the texts, or at least through a representative sample of them selected at random. For example, in a survey conducted on a sample of 5,000 people, reading every single response to find the most relevant themes, in terms of repetition and frequency of ideas, could be a very long and frustrating task. The researcher must therefore first select a random group of texts of a size a human reader can manage, such as 100 of them. After this quick diagonal reading, the coder writes down general descriptions of what he or she has read, trying to visualize the possible categories to which these texts can be attributed. The coder then reads each text, one by one, and places it in one of the closed categories initially visualized. If a text does not fall into any of the categories, a new category is created for it.
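The procedure above can be sketched in a few lines of Python. This is only an illustration of the workflow and not a tool described in this article: the function names, the keyword-based codebook and the sample responses are all hypothetical, and matching on keywords is a crude stand-in for the coder's judgment.

```python
import random

def draw_sample(responses, k=100, seed=0):
    """Return a reproducible random sample of at most k responses to skim."""
    rng = random.Random(seed)
    return rng.sample(responses, min(k, len(responses)))

def code_response(text, codebook):
    """Return the first category whose keywords appear in the text, else None."""
    lowered = text.lower()
    for category, keywords in codebook.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return None

def code_all(responses, codebook):
    """Place each response in a category; collect the ones that fit nowhere."""
    coded, uncoded = {}, []
    for text in responses:
        category = code_response(text, codebook)
        if category is None:
            uncoded.append(text)
        else:
            coded.setdefault(category, []).append(text)
    return coded, uncoded

# Hypothetical responses and a codebook drafted after skimming the sample.
responses = [
    "The price is too high",
    "Staff were friendly",
    "Too expensive for me",
    "Delivery was late",
]
codebook = {"PRICE": ["price", "expensive"], "SERVICE": ["staff", "friendly"]}

coded, uncoded = code_all(responses, codebook)

# A text that fits no category leads the coder to create a new one.
codebook["DELIVERY"] = ["delivery"]
recoded, still_uncoded = code_all(responses, codebook)
```

In this sketch the `uncoded` list plays the role of the texts that force the coder to open a new category; after the codebook is extended, a second pass leaves nothing unclassified.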

TYPICAL TEXT PROCESSING PROBLEMS FOUND IN A SURVEY

There are many problems and disadvantages related to the manual coding of texts explained in the previous paragraph. The first is handling the large volume of data collected in the survey. This problem can be reduced by adding several people to the team: instead of assigning all the coding work to a single person, the work can be distributed among many coders, which cuts processing time and makes it easier to organize the task around the delivery dates set out in the study schedule.

Apart from volume and processing speed, the second problem we observed in manual coding is the need to assign a text to multiple categories. This usually occurs with broad opinion texts, which may contain two or more ideas in the same text, and not just one as might be expected. The coder must then assign the opinion text to several predefined closed categories, generating not a single-choice variable but a multiple-choice variable, whose processing can be somewhat more complex.
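To illustrate why a multiple-choice variable is more complex to process, the following minimal sketch stores each coded text as one 0/1 indicator per category. The codebook, the sample texts and the function names are hypothetical, and keyword matching only stands in for the coder's decision.

```python
def assign_categories(text, codebook):
    """Return every category whose keywords appear in the text."""
    lowered = text.lower()
    return [
        category
        for category, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    ]

def to_indicator_row(categories, all_categories):
    """Encode one respondent's categories as a 0/1 value per category."""
    return {category: int(category in categories) for category in all_categories}

# Hypothetical codebook and opinion texts.
codebook = {
    "PRICE": ["price", "expensive"],
    "SERVICE": ["staff", "friendly"],
    "DELIVERY": ["delivery", "shipping"],
}
texts = [
    "Good price but slow delivery",
    "Friendly staff",
    "Cheap price and friendly staff",
    "Nothing to add",
]

rows = [to_indicator_row(assign_categories(t, codebook), codebook) for t in texts]

# Mentions per category; the total can exceed the number of respondents.
mentions = {category: sum(row[category] for row in rows) for category in codebook}
total_mentions = sum(mentions.values())
```

Because one respondent can contribute to several categories, percentages must be reported either over respondents or over mentions, and the two bases give different results.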

The third problem is what to do when the text being coded comes from the "other" option of a single-choice or multiple-choice question. For example, suppose the survey asked "What is your favorite ice cream flavor?", with the answer options CHOCOLATE, BUTTER, STRAWBERRY and OTHER (SPECIFY), and that we are classifying the texts that come from OTHER (SPECIFY). There we will find flavors such as tangerine, tamarind, coconut, etc. But what happens when we read the text DARK CHOCOLATE? In that case we should delete that text from the database and increase the count for CHOCOLATE. This situation is a real headache for coders, because their work must be coordinated with the field team to correct the database and resolve this borderline case.
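The correction described for DARK CHOCOLATE can be sketched as follows. The record layout (`choice` and `other_text` fields) and the function name are assumptions made for this example, not the structure of any particular survey database, and the substring match is deliberately naive.

```python
from collections import Counter

CLOSED_OPTIONS = ["CHOCOLATE", "BUTTER", "STRAWBERRY"]

def back_code(record, closed_options):
    """Return a copy of the record, moving an OTHER text that names a
    closed option into that option and clearing the text."""
    if record["choice"] != "OTHER":
        return dict(record)
    text = record["other_text"].strip().upper()
    for option in closed_options:
        if option in text:  # naive substring match, an assumption of this sketch
            return {"choice": option, "other_text": ""}
    return dict(record)

# Hypothetical records as captured in the field.
records = [
    {"choice": "CHOCOLATE", "other_text": ""},
    {"choice": "OTHER", "other_text": "dark chocolate"},
    {"choice": "OTHER", "other_text": "tamarind"},
]

cleaned = [back_code(record, CLOSED_OPTIONS) for record in records]
counts = Counter(record["choice"] for record in cleaned)
```

A substring rule like this one will also produce wrong matches (an answer such as "peanut butter" would be moved to BUTTER), so in practice each proposed correction still needs to be reviewed by the coder before the database is changed.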

SOFTWARE AND TOOLS FOR CLASSIFYING (OR CODING) SURVEY TEXTS

Since the late 1970s, the market research industry has been thinking about algorithms and tools to perform the hard work of coding open-ended survey texts and converting them into closed categories as quickly, conveniently and efficiently as possible (all within the framework of a public opinion research project carried out by a market research firm). At present, entering the 2020s, little has been achieved in this regard beyond various utilities that speed up and optimize the coding process, and the coding task is still largely done manually or semi-automatically. SPSS has been one of the few software tools that has dared to offer automatic options for classifying large volumes of texts. It uses algorithms for building decision trees and cluster analysis, as well as other advanced statistical techniques that had already been used in the 1990s for creating taxonomies, group analysis, market segmentation, etc.

The result generated by the SPSS Modeler Text Analytics application on a sample of 10,000 texts collected in an opinion survey is really poor when compared to the work done manually by a human, or at least that is what we observed for the Spanish language. When the collected texts are in English, this software can produce better automatic coding, so some SPSS Text Analytics users chose to translate all the survey texts into English in bulk and then pass them to SPSS Text Analytics. Even with this process, the automatic work is of poor quality compared to the manual work done by human coders. All this has led companies to continue using manual coding systems, but now with advanced aids such as RotatorSurvey's batch sorting, which reduce working time and increase the efficiency and speed of manual work.

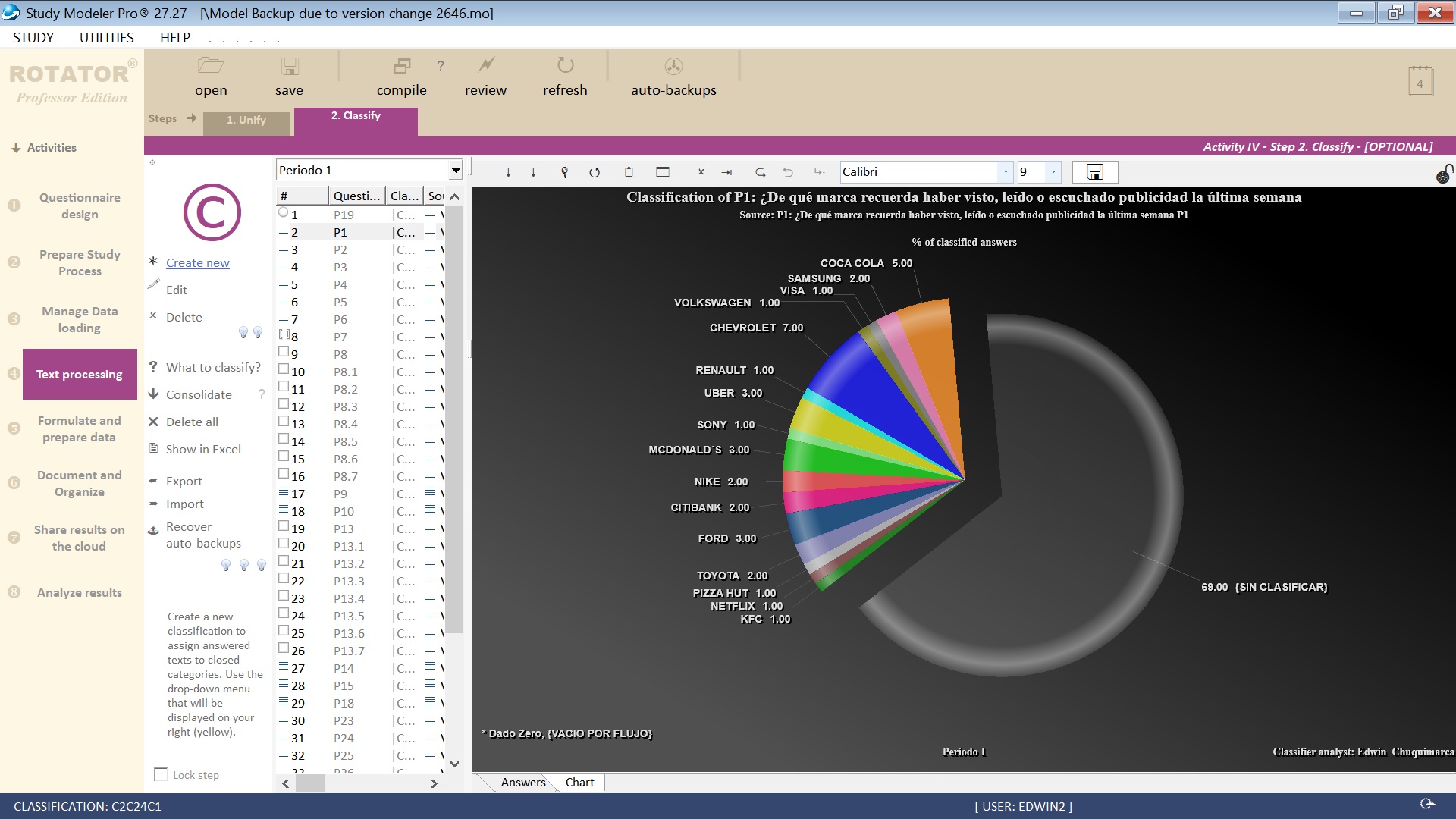

Screen view of the Rotator Classifier module as of 2020

ARTIFICIAL INTELLIGENCE APPLIED TO OPINION SURVEY TEXT PROCESSING

The next stage, which we expect to see in the coming decade, is the application of artificial intelligence (AI) heuristic algorithms: intelligent systems capable of analyzing large volumes of textual content in seconds, learning human logic and proposing multiple classification alternatives, all with a logic similar or superior to that of a human expert. Under this promising outlook, the coder's job would be reduced to reviewing quality, adjusting system parameters and training the expert systems. Despite this expectation and the current state of the art of artificial intelligence, the truth is that replacing the human mind in closing the open questions of a survey will not be possible in the medium or long term. The reason is that artificial intelligence systems require thousands (or millions) of data records for training. If we do not have large studies prior to ours that contain all the variability of the desired information, the coding of our study will be poor. In short, there is still a huge technological gap to close before automatic categories can be obtained with little or no human intervention and with a sound interpretation of the socio-cultural phenomena typically studied in surveys.

Documents for further discussion

Video: Introduction to SPSS Text Analytics

Video: Using IBM SPSS Modeler with Text Analytics

Video: The Future of Analytics: Machine Learning and Data Analytics